0xAB

Andrei Barbu

Andrei is a research scientist at MIT working on natural language processing, computer vision, and robotics, with a touch of neuroscience.

Computer vision datasets are too easy!

Easy for humans, hard for machines

How hard is a dataset like ImageNet? Everything we do in computer vision depends on our datasets. There’s really no theory of vision left. Machine learning for computer vision is a purely empirical science at the moment. So you would think that we would be able to answer a simple question like that: how hard is ImageNet? Until just recently we had no idea. The answer is surprising and part of a long-term mystery our group has been working to unravel.

It all starts with computer vision benchmarks. On benchmarks machines work incredibly well. Sometimes machines are even superhuman! Yet, in the real world, machine performance is pretty mediocre. Why do we have this massive gap? This isn’t a normal state of affairs for a science. Engineers who build bridges don’t expect there to be a huge difference between what their calculations say and real-world performance: reasonable safety margins don’t lead to massive bridge collapses.

We’ve already tried to answer this question before. Maybe machines are cheating by taking advantage of correlations in our datasets, like the fact that plates tend to appear in kitchens. That’s what ObjectNet is about (Barbu, Mayo & al., 2019): creating better datasets to highlight the weaknesses of current ones. But is ObjectNet really harder for humans? And as a whole, are our datasets easy or hard? Is solving current datasets enough to convince you that a machine is human-level?

To answer this question we developed a method to compute the difficulty of an image and of an image dataset (Mayo, Cummings & al., 2023). Before we talk about how this works, let’s get to the surprising results. ImageNet and ObjectNet are both too easy! The vast majority of computer vision datasets appear to be easy. So when someone says that their model gets 95% on ImageNet, remember that about 90% of that number is being computed on images that are simple for humans to recognize.

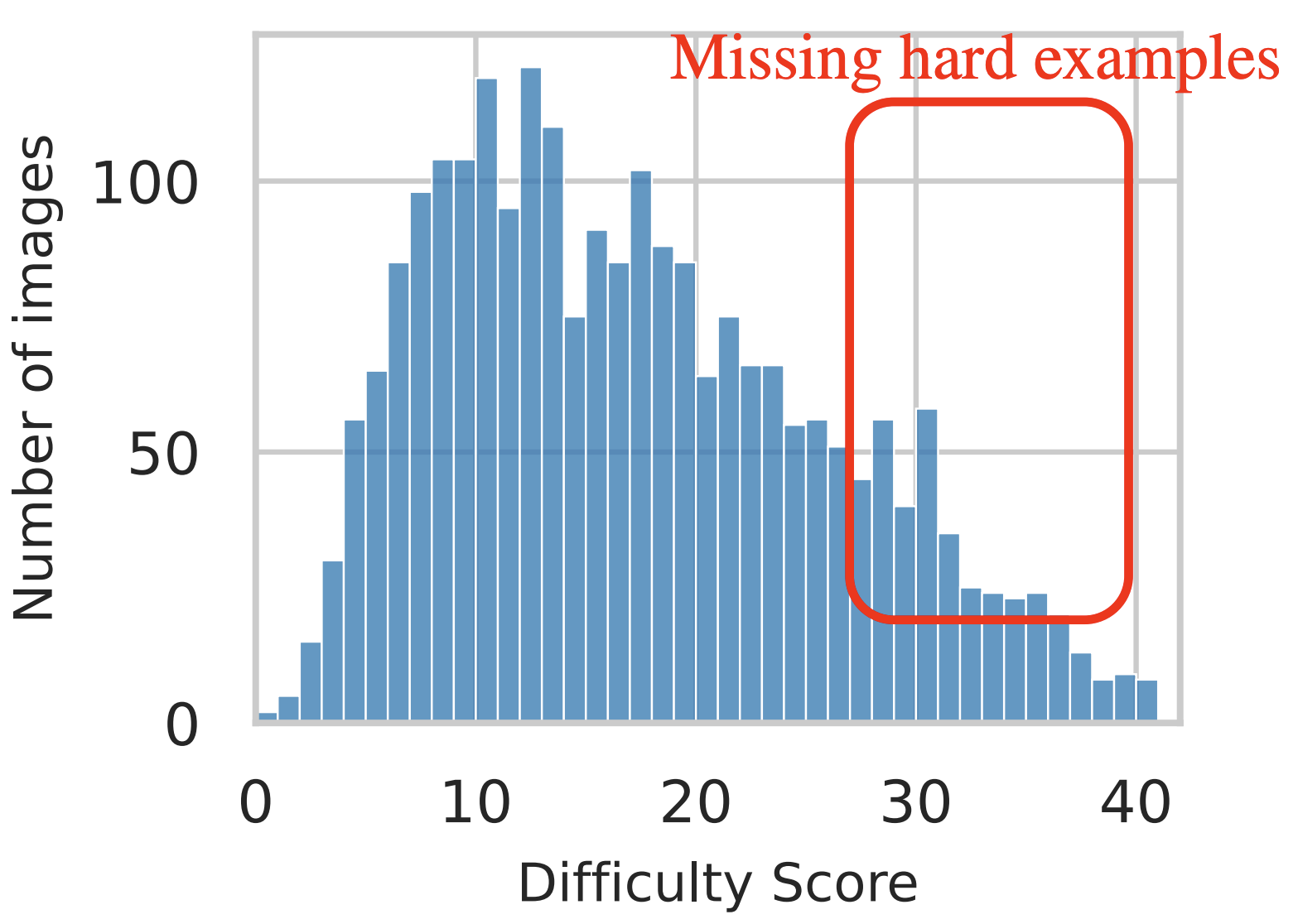

The ImageNet difficulty score. ImageNet images are overwhelmingly easy. Almost 90% of images are easy for humans.

We need to take difficulty more seriously as a community. Right now, many benchmarks are being saturated. There really isn’t much room to make major improvements when you’re already at 90+% accuracy. But if all we did was take our benchmarks, compute their difficulty and report performance over the hard images, all of a sudden you have a hard task again with massive room for improvement.
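To make the reporting idea above concrete, here is a minimal sketch of splitting accuracy by difficulty. It assumes every test image already has a difficulty score attached; the `Prediction` class, the `accuracy_by_difficulty` function, the threshold, and the toy numbers are all hypothetical and only illustrate the bookkeeping, not our actual pipeline.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Prediction:
    correct: bool      # did the model label this image correctly?
    difficulty: float  # hypothetical per-image difficulty score (higher = harder)


def accuracy(preds: List[Prediction]) -> float:
    """Fraction of correct predictions; NaN when the list is empty."""
    if not preds:
        return float("nan")
    return sum(p.correct for p in preds) / len(preds)


def accuracy_by_difficulty(preds: List[Prediction], hard_threshold: float) -> Dict[str, float]:
    """Report accuracy overall, on easy images, and on hard images."""
    easy = [p for p in preds if p.difficulty < hard_threshold]
    hard = [p for p in preds if p.difficulty >= hard_threshold]
    return {
        "overall": accuracy(preds),
        "easy": accuracy(easy),
        "hard": accuracy(hard),
        "hard_fraction": len(hard) / len(preds) if preds else float("nan"),
    }


# Toy data (made up): nine easy images, all correct, and one hard image, wrong.
toy = [Prediction(correct=True, difficulty=0.1) for _ in range(9)]
toy.append(Prediction(correct=False, difficulty=0.9))
report = accuracy_by_difficulty(toy, hard_threshold=0.5)
print(report)
```

On a split like this, a headline number that looks saturated can hide a hard subset where the model is far from done.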

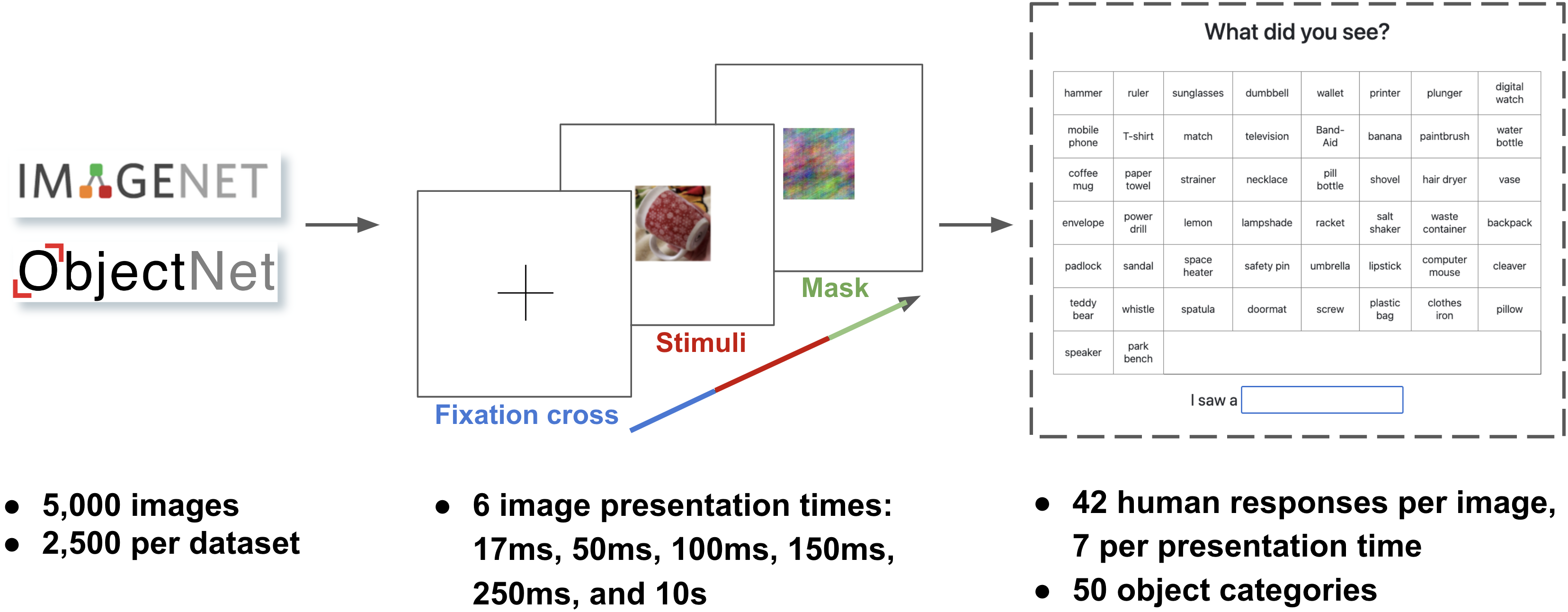

So how do we measure difficulty? Using Minimum Viewing Time (MVT): how many milliseconds must an image be on screen before a human can recognize the object in that image?
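As a rough illustration of the MVT idea, the sketch below takes trial records of the form (image, presentation time in ms, whether the viewer was correct) and returns, for each image, the shortest presentation time at which viewers reach a chosen accuracy. The record format, the `min_accuracy` cutoff, and the durations in the toy data are assumptions for this example; they are not the protocol from the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

Trial = Tuple[str, int, bool]  # (image_id, duration_ms, viewer_was_correct)


def minimum_viewing_time(trials: Iterable[Trial], min_accuracy: float = 0.5) -> Dict[str, Optional[int]]:
    """Shortest duration per image at which viewer accuracy >= min_accuracy.

    Returns None for an image that never reaches the cutoff at any duration.
    """
    # Group the outcomes by image, then by presentation duration.
    by_image = defaultdict(lambda: defaultdict(list))
    for image_id, duration_ms, correct in trials:
        by_image[image_id][duration_ms].append(bool(correct))

    mvt: Dict[str, Optional[int]] = {}
    for image_id, by_duration in by_image.items():
        mvt[image_id] = None
        # Scan durations from shortest to longest and stop at the first success.
        for duration_ms in sorted(by_duration):
            outcomes = by_duration[duration_ms]
            if sum(outcomes) / len(outcomes) >= min_accuracy:
                mvt[image_id] = duration_ms
                break
    return mvt


# Toy trials (made up): a prototypical mug and an unusual one.
toy_trials = [
    ("mug", 20, True), ("mug", 20, True), ("mug", 100, True),
    ("odd_mug", 20, False), ("odd_mug", 20, False),
    ("odd_mug", 100, False), ("odd_mug", 100, True),
    ("odd_mug", 1000, True), ("odd_mug", 1000, True),
]
mvt = minimum_viewing_time(toy_trials, min_accuracy=0.75)
print(mvt)
```

An image with a small MVT is easy, one with a large MVT is hard, and one that never reaches the cutoff comes back as `None`.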

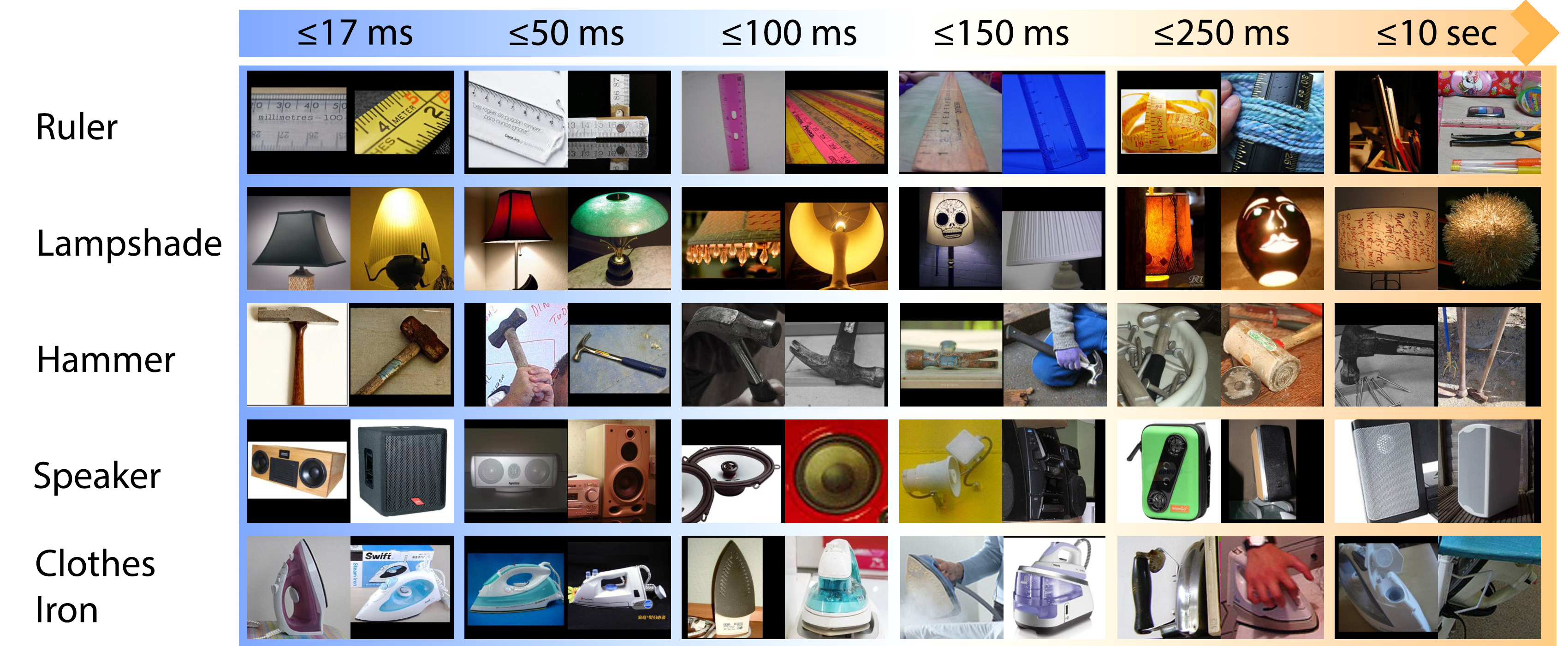

The results look very reasonable. The images on the left look more prototypical while the harder ones on the right look more unusual.

Image difficulty brings up many other questions. What other tasks could we do this for? What happens in our brains when we look at hard images? Can machines estimate difficulty and make use of it to process images more efficiently? There’s no notion of time when machines process images: an easy image and a hard image both require the same amount of machine time, while humans obviously manage to gain 10x efficiency by exploiting difficulty.

- Barbu, A., Mayo, D., Alverio, J., Luo, W., Wang, C., Gutfreund, D., Tenenbaum, J. & Katz, B. (2019). ObjectNet: A large-scale bias-controlled dataset for pushing the limits of object recognition models. Advances in Neural Information Processing Systems (NeurIPS), 32. objectnet.dev
- Mayo, D., Cummings, J., Lin, X., Gutfreund, D., Katz, B. & Barbu, A. (2023). How hard are computer vision datasets? Calibrating dataset difficulty to viewing time.